Existential Risks: AI

Some people worry that superintelligent computers might pose an existential threat to humanity. Maybe an advanced AI will for some reason decide that it is advantageous to eliminate all humans, and we won't be able to stop it, because it will be much more intelligent than us.

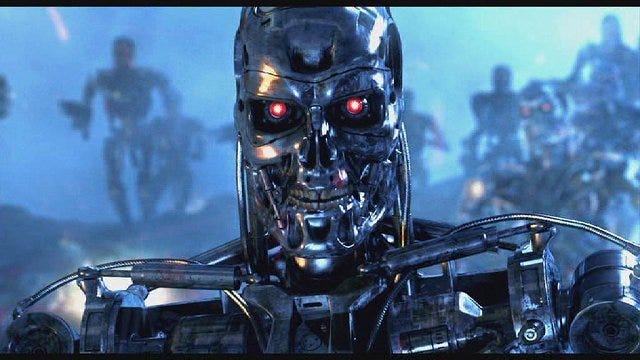

When journalists write about this sort of thing, they traditionally include pictures of the Terminator robot from the movie of the same name -- so I've gone ahead and followed the tradition. I gather that this annoys AI researchers. (For an example, see this news story, which claims that a quarter of IT workers believe that Skynet is coming: betanews.com/2015/07/13/over-a-quarter-of-it-workers-believe-terminators-skynet-will-happen-one-day/. That story is typical media alarmist BS, btw. The headline is completely bogus.)

I have no expertise on this topic. Nevertheless, here are my thoughts on it.

(1) Consciousness

It seems to be an article of faith in philosophy of mind and AI research that, as soon as they become sophisticated enough at information-processing, computers will literally be conscious, just as you and I are. I personally have near-zero credence in this. I think there is basically no reason at all to believe it.

This, however, does not mitigate the existential risk. A non-conscious AI can still have enormous power and could still cause great damage. If anything, its lack of consciousness might accentuate the threat. Genuine consciousness might enable a machine to understand what is valuable, to understand the badness of pain and suffering, and so on -- which might prevent it from doing horrible things.

Though I don't think conscious AI is on the horizon, I do think we are, obviously, going to have computer systems doing increasingly sophisticated information-processing, which will do many human-like tasks, and do them better than all or nearly all humans. These systems are going to be so sophisticated as to be essentially beyond normal human understanding. It is possible that some of them will get out of our control and do things they were not intended to do.

(2) Computers Are Not People Too

However, the way that most people imagine AI posing a threat -- as in science fiction stories that have human-robot wars -- is anthropomorphic and not realistic. People imagine robots that are motivated like humans -- e.g., megalomaniacal robots that want to take over the world. Or they imagine robots being afraid of humans and attacking out of fear. Or robots that try to exterminate us because they think we are "inferior".

Advanced AI won't have human-like motivations at all -- unless, for some reason, we program it that way. (In my view, it won't literally have any motivations whatsoever. So to rephrase my point: AI won't simulate human-like motivations, unless we program it to do so. Henceforth, I won't repeat such tedious qualifications.) I don't know why we would program an AI to act like a megalomaniac, so I don't think that will happen. It won't even have an instinct of self-preservation, unless we decide to program that. It won't 'experience fear' at the prospect of its destruction by us, unless we program that. Etc.

The desire to take over the world is a peculiarly human obsession. Even more specifically, it is a peculiarly male human obsession. Pretty much 100% of people who have tried to take over the world have been men. The reason for this lies in evolutionary psychology (social power led to greater mating opportunities for males, in our evolutionary past). AI won't be subject to these evolutionary, biological imperatives.

(3) Bugs

But that does not mean that nothing bad is likely to happen. The more general worry we should have is about programming mistakes, or unintended consequences.

Computers will follow the algorithm that we program into them, and we can know with near certainty that they will follow it flawlessly. The problem is that we cannot anticipate all the consequences of a given algorithm in every circumstance in which it might be applied, especially when the algorithm is incredibly complicated, as it would be for an AI program. And in cases where an algorithm implies 'absurd' results, results that are obviously not what the designer intended, a computer has no capacity whatsoever to see that. It still just follows the algorithm. (Again, that's because it is not conscious and has no mental states at all.)

Nick Bostrom gives the example of a computer system built to make paperclips more efficiently. The computer might improve its own intelligence, eventually figuring out how to escape human control and convert most of the Earth's mass into paperclips. This would have human extinction as an unfortunate side effect.

Now, I don't think that specific example is likely, nor is it a typical example of the sort of unintended consequences that algorithms have. The usual unintended consequences would be more complicated, so not very good for making points in blog posts.

The point of concern, in my view, is that when we write down a general, exceptionless rule, that rule almost always turns out to have some absurd consequences in some circumstances. With a computer program, you can't just put in a clause that says, "unless this turns out to imply something obviously crazy." You can't rule out the obviously crazy unless you first have a complete and perfectly precise definition of what counts as obviously crazy.
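To make the point concrete, here is a toy Python sketch of a single exceptionless rule, loosely in the spirit of Bostrom's paperclip example. Everything in it (the function name `maximize_paperclips`, the resource list, the conversion rate) is hypothetical and invented for illustration; it is not Bostrom's model or anyone's actual AI system. The rule is followed flawlessly, and that is exactly the problem: it never asks whether a resource was meant to be turned into paperclips.

```python
# A hypothetical, deliberately naive "make as many paperclips as possible" rule.
# The resource names and the conversion rate are invented for illustration only.

CLIPS_PER_KG = 1000  # assumed conversion rate, purely illustrative


def maximize_paperclips(resources):
    """Apply one exceptionless rule: convert every kilogram of metal into paperclips.

    `resources` maps a resource name to (kg_of_metal, intended_for_paperclips).
    The second field is information the rule never looks at: nothing in the
    algorithm distinguishes scrap wire from a hospital's equipment.
    """
    paperclips = 0
    consumed = []
    for name, (kg_metal, _intended_for_paperclips) in resources.items():
        if kg_metal > 0:  # the only condition the rule checks
            paperclips += kg_metal * CLIPS_PER_KG
            consumed.append(name)
    return paperclips, consumed


resources = {
    "scrap wire": (50, True),
    "spare steel stock": (200, True),
    "hospital equipment": (5000, False),
    "the town's water pipes": (80000, False),
}

clips, consumed = maximize_paperclips(resources)
print(clips)
print(consumed)
```

Run as written, it reports 85,250,000 paperclips and lists all four resources as consumed, including the hospital equipment and the water pipes. The "intended" flag is sitting right there in the data, but the rule ignores it. One could patch the rule to check that flag, but the patch only works because someone labeled every resource in advance; the general case would require exactly the complete, precise definition of "don't touch this" that we don't have.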

(4) Crashing

So, I think the simple problem of unintended consequences is a more realistic concern than the worry that AI is going to get its own ideas and decide that it hates us, that it wants political power, that we're inferior, etc.

But in fact, I still don't think this is all that big a threat. The main reason is that, when programs malfunction -- when the complicated algorithm the computer is following has an unintended consequence -- what almost always happens is that the program crashes, that is, it just shuts down. Or it does some bizarre, random thing that seemingly makes no sense.

Now, that could be very bad if, say, your program is in charge of a robotic surgery, or air traffic control, etc. But it isn't going to start acting like an agent coherently pursuing some different goal (which is how things go in the sci-fi stories).

So when designing AI, I think we need to have backup systems to take over in case the main system crashes.

(5) The Real Threat

So far, I seem to be minimizing the purported existential threat of AI. But I do think there is a serious existential risk that has something to do with AI. It's just not the AI that would be out to get us. It is human beings that would be at the core of the threat.

The danger posed to humanity by humans is nowhere near as speculative as the usual out-of-control-AI scenarios. We know humans are dangerous, because we have many real cases of humans killing large numbers of other human beings. Right now, there are people who quite seriously believe that it would be good if humanity became extinct. (A surprisingly plausible argument could be made for that!) There are others who would gladly kill all citizens of particular countries, or all members of particular religious or ethnic groups. There are probably millions of people in the world who would like to kill all Jews, or all Americans.

Most of these crazy humans fail to trigger genocides and other catastrophes because they simply lack the power to do so, and/or they do not know how to do it.

So here I come to the real AI threat. It is that AI may make dangerous humans even more dangerous. Crazy humans may use sophisticated computer systems to help them figure out how to cause enormous amounts of harm.

Military AI

And here is why I don't think the Skynet/Terminator story is so silly, after all. In the Terminator stories, Skynet starts as a military defense system. I think it's completely plausible that the military will use AI to help control military systems and help figure out the best strategies for killing people. If one country does it, other countries will do it too.

I also think it's not implausible that countries might be almost forced to give control of their military systems to computers -- or else face a crushing military disadvantage relative to other nations.

A simple example: suppose there was a computer-controlled fighter jet in a dogfight with a human-controlled jet. The computer can perform 3 billion calculations in one second; the human can do about three. Against a good program, the human is simply going to have no chance. Hence, every military would be forced to put its airplanes under computer control.

A similar point might apply to the rest of the military forces of a nation. What if we were in another cold war with Russia? But this time, technology is so advanced that, if the Russians launch a nuclear attack on the U.S., the U.S. President would have only two minutes, once the Russian missiles were detected, to decide how to respond. If that were known to be the situation, it would be very tempting to place the U.S. nuclear arsenal under computer control. If both nations put their nuclear arsenals under computer control, then AI-prompted Armageddon starts to seem more likely. (I note that during the Cold War, there were several cases in which the U.S. and Soviet Union actually came close to nuclear war, and some involved computer errors and other false detections of missile launches. https://www.ucsusa.org/sites/default/files/attach/2015/04/Close%20Calls%20with%20Nuclear%20Weapons.pdf)

So there are two threats. First, governments of powerful nations may kill us with AI-controlled military systems (possibly by mistake). Second, terrorists may use AI to figure out how to kill us, because they're crazy.

Of course, we can use AI to defend against hostile AI. But destruction usually has an advantage over protection. (It's easier to destroy, there are more ways of doing it, etc.) So the hostile AIs will just have a big, unfair advantage.

AI in a box

Some of the discussion of the AI existential risk has to do with whether we humans could keep a sophisticated AI under control, or whether it would escape from us and then possibly destroy us. There is a sort of game in which two people role-play as a human and an AI, and the AI tries to talk the human into letting it (the AI) have access to the outside world (the internet, etc.). Half the time, the AI succeeds, even against human players who are initially convinced of the dangers of AI (https://en.wikipedia.org/wiki/AI_box). A real superintelligent AI would have better odds.

But I don't think we need to debate that, because if humans knew how to make an AI sophisticated enough to extinguish us, and it were reasonably easy and affordable, some human beings would create an AI, deliberately program it to cause maximum destruction, and release it.

This point renders moot almost every safeguard that you might think of to prevent AI from causing mass destruction. A human will just disable the safeguard, or deliberately create an AI without it.

As I suggested in a previous post ("We Are Doomed"), we will have to hope that humans become less crazy and evil, before our technology advances enough to make it very easy to destroy everything. This is something of a faint hope, though, because it only takes one crazy person with access to a powerful technology to wreak mass destruction.