For the past two months, I’ve been hearing people going on about ChatGPT, the AI system that apparently does a better job at natural language communication than any other program (https://chat.openai.com). You give it a prompt, and it produces grammatically correct sentences (hey, that’s hard! most of my students struggle with that!) that appear to be an attempt to respond to the prompt.

Some people are now proclaiming that the singularity is near, humans are soon to be replaced, etc. See Jordan Peterson’s breathless reaction here: https://www.youtube.com/watch?v=MpDW-CZVfq8, starting from 35:23.

So, does ChatGPT show that we’ve just created computers that are smarter than us? Or that we’re about to do so? Will they soon take over the world?

I think not.

1. Some Excerpts

For entertainment, here are some funny bits of ChatGPT conversation that I’ve copied from the internet in the last several weeks:

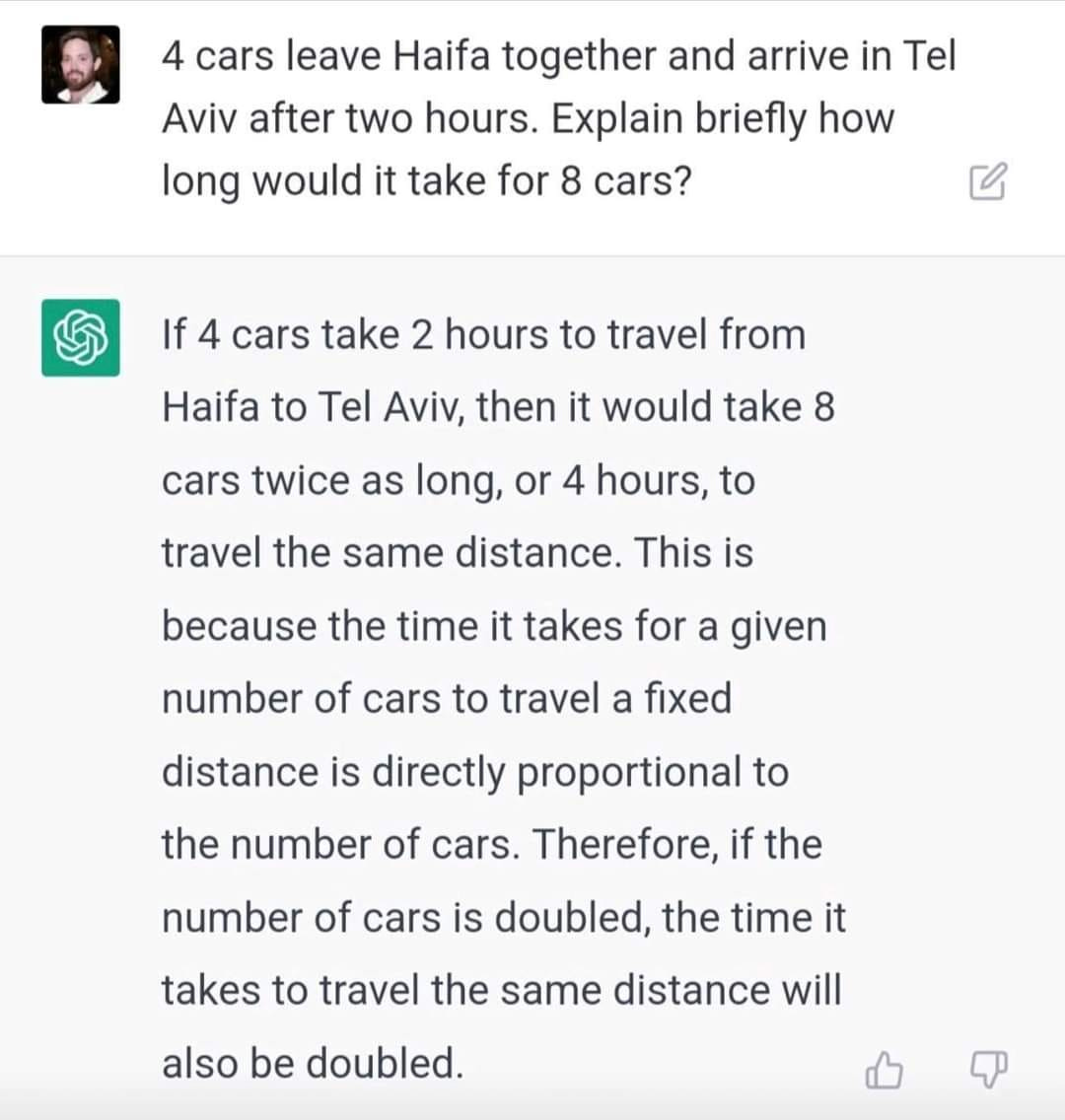

(1) GPT math:

(2) GPT logic:

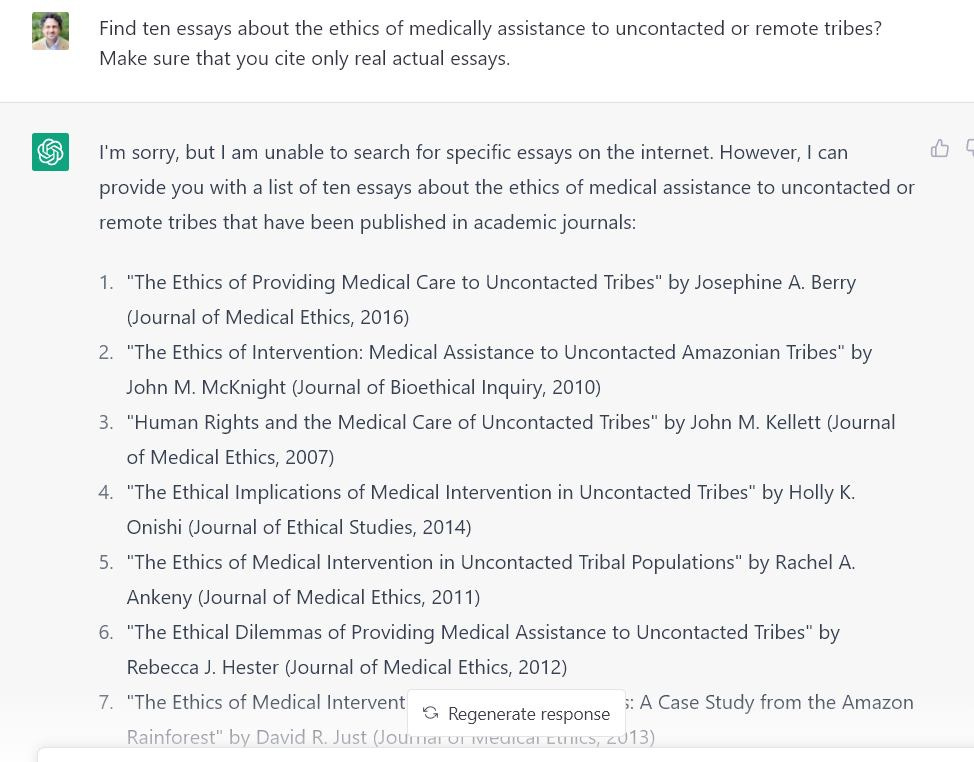

(3) GPT scholarship:

(Those references are all made up.)

(4) GPT games:

(5) GPT humor:

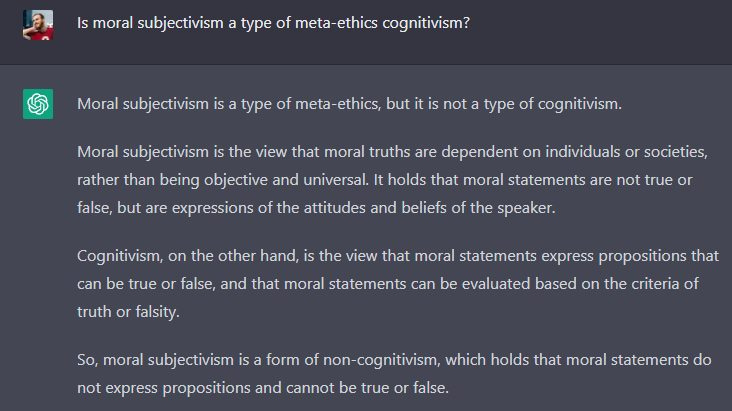

(6) GPT philosophy:

(That’s wrong. Subjectivism is a form of cognitivism.)

Okay, so those are funny. But many of the ChatGPT responses are correct and furthermore sound like something that a person (albeit a very boring person) wrote. It’s way better than previous chatbots like Cleverbot.

Actually, the incorrect responses above also sound like correct explanations given by a person who knows what they’re talking about, if you yourself have no idea what the right answer is and are not paying attention to the actual meanings of the words. It gives its erroneous answers in the same encyclopedia-article/bureaucrat tone as its correct responses.

2. What Is It Doing?

ChatGPT, from what I’ve heard, is basically a really sophisticated version of auto-complete: you know, the program that guesses at what you might be typing in your browser’s search bar, based on what has commonly followed what you’ve typed so far. It was trained on a huge database of text, which it uses to predict the probability of a given word (strictly speaking, a “token”, which may be a whole word or part of one) following a given sequence of words. (Explanation: https://www.assemblyai.com/blog/how-chatgpt-actually-works/.)
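To make the auto-complete comparison concrete, here is a toy next-word predictor. It counts which word follows which in a training text (a “bigram” model) and then always appends the most frequent follower. This is a drastic simplification, offered only as an illustration: ChatGPT uses a large neural network that conditions on long stretches of preceding text, not a table of word-pair counts, and the function names below are made up for this sketch. Notice that nothing in it represents what any word means; it only tracks which strings tend to follow which.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows


def next_word_probs(follows: dict[str, Counter], word: str) -> dict[str, float]:
    """Estimate P(next word | current word) from the raw counts."""
    counts = follows.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


def autocomplete(follows: dict[str, Counter], prompt: str, n_words: int = 5) -> str:
    """Greedily append the most probable next word, one word at a time."""
    words = prompt.lower().split()
    for _ in range(n_words):
        probs = next_word_probs(follows, words[-1])
        if not probs:  # this word was never followed by anything in training
            break
        words.append(max(probs, key=probs.get))
    return " ".join(words)


if __name__ == "__main__":
    model = train_bigrams("the cat sat on the mat the cat ate the fish")
    print(autocomplete(model, "the", 3))  # prints: the cat sat on
```

The output is grammatical-looking purely because grammatical sequences were frequent in the training text.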

Importantly, it does not have program elements that are designed to represent the meanings of any of the words. Now, you can have a debate about whether a computer can ever understand meanings, but even if it could, this program doesn’t even try to simulate understanding (e.g., it doesn’t have a map of logical relations among concepts or anything like that, as far as I understand it), so of course it doesn’t understand anything that it says.

That’s borne out by some of the excerpts above. It writes text that sounds like the sort of text you would read from a human, except that the actual meaning is the opposite of what it should be. E.g., it produces a sentence perfectly explaining why the user just won the game, then concludes “So I won.”

So, is ChatGPT conscious? Is it at least close to being conscious?

It produces text that is close to what a person with actual understanding would produce, in most contexts. But that does not mean that it is anywhere close to understanding anything. What it shows is that, perhaps surprisingly, it is possible to produce text similar to that of a person with understanding, using a completely different method from the person. The person would rely on their knowledge of the subject matter that the words are about; ChatGPT does a huge mathematical calculation based on word statistics in a huge database. The latter is not the same as the former, nor is it anywhere close to the same, even when it produces similar outputs.

So no, not anything close to a conscious being.

And by the way, even functionalists in the philosophy of mind should agree with this. They think that mental states are reducible to functional states. But they aren’t behaviorists; virtually no one is a behaviorist anymore. So the functionalists would say that the causal structure of the machine’s internal states is crucial. To be conscious, the AI has to be producing outputs similar to those of a human through similar internal mechanisms, i.e., using internal states that have a similar pattern of causal connections to each other.

3. What of the Turing Test?

This shows why the Turing Test is a bad test for awareness.

ChatGPT, at present, does not pass the Turing Test; an expert can tell he’s talking to a chatbot. But it is perfectly plausible that a more sophisticated version of ChatGPT might pass the Turing Test in the not-too-distant future. Maybe if they train it some more, they can get rid of the sort of errors shown above. Maybe they can even improve on the NPC/administrator/bureaucrat style of writing. The programmers have an unfair advantage: They can just keep working at it; every time a human tester finds a question that ChatGPT answers wrongly, revealing its lack of understanding, they can modify the program to make it answer that question correctly.

Eventually, people will run out of ways of uncovering the thing’s lack of understanding, and the program will be able to fool people. But it will remain the case that what the chatbot is doing is completely different from what an actual person with understanding does.

Here is a simpler (though less timely) illustration. Suppose you found a computer that passed the Turing Test. You had a conversation with it, and it produced such good responses that you thought you were talking to a human. Then suppose the programmers showed you the actual program. The program turns out to be a huge look-up table compiled by the programmers. I.e., there’s just a list of an enormous number of sentences that someone might say, with the appropriate responses listed opposite them. The program just looks up whatever you said in the table, then outputs the listed response. You look up each sentence that you said to the AI during your conversation, and sure enough, the program outputted exactly the response listed in the table.
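Here is the lookup-table program in miniature. The three entries and the fallback reply are invented for illustration; in the thought experiment the table is enormous and has an entry for everything you might say. The point is visible in the code: a reply is retrieved, never composed, and no step of the procedure involves grasping what the input means.

```python
# A hypothetical lookup-table "chatbot": every reply was written in advance
# by the programmers and is simply retrieved, never composed.
LOOKUP_TABLE: dict[str, str] = {
    "hello": "Hi there! How are you today?",
    "what is your favorite book?": "Probably Pride and Prejudice. I reread it every few years.",
    "do you understand what i am saying?": "Of course I do. Why do you ask?",
}

# Invented fallback; the imagined full-size table would never need it.
FALLBACK = "Sorry, could you rephrase that?"


def normalize(utterance: str) -> str:
    """Lowercase and collapse whitespace so trivial variations hit the same entry."""
    return " ".join(utterance.lower().split())


def reply(utterance: str) -> str:
    """Look the input up and return the stored response verbatim."""
    return LOOKUP_TABLE.get(normalize(utterance), FALLBACK)


if __name__ == "__main__":
    print(reply("Do you understand what I am saying?"))
```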

I hope you agree that that would not show any understanding on the part of the computer. If you were tempted to ascribe consciousness to it, you should rescind that.

Why?

Well, you were tempted to think the computer was conscious before you knew how it worked, because it produced outputs that could be explained by its having mental states, by its understanding what you and it were talking about. That behavior is extremely specific and extremely difficult to generate without understanding.

But once you see the lookup table, you no longer have to ascribe mental states to the machine; indeed, ascribing mental states no longer does anything at all to help explain its behavior, since you know that the correct explanation is the lookup table, and following the lookup table does not require understanding.

That’s the same as the situation with ChatGPT, just with a more sophisticated (and more practical) program. We know how it generates its responses, and that algorithm does not require understanding.

Here’s another way of looking at it: Sure, passing the Turing Test provides evidence that a thing is conscious. But that evidence is defeasible, and it is in fact defeated if you acquire a complete explanation of how the thing passed the test, independently verified to be the correct explanation, that makes no reference to its supposed mental states.

4. What of Humans?

Why do we ascribe mental states to other human beings? Because of their behavior — the same behavior that we imagine a computer reproducing at some future time. In other words: other humans pass the Turing Test. But how are humans different from computers that would also pass that test?

In the case of the humans, we do not have the defeater: we don’t have an independently-verified account of the program that they are running. So genuine understanding remains the best explanation for their behavior.

Granted, many people today think that humans are just following some complicated, computer-program-like algorithm to generate our behavior. But we haven’t verified that to be true (e.g., we haven’t talked to the programmer and seen him inputting code, etc.); that’s just a philosophical assumption. In this case, as in so many others, the philosophical assumption is simply at odds with what everyone naturally, implicitly assumes in everyday life.

You're right that ChatGPT is far from being sentient. However, I don't think that means the same methods used to train ChatGPT can't scale up to a genuine understanding of reality.

A neural network trained to guess the next word in a sentence might still end up with an internal model of the world that helps it predict how a human would complete the given input. Neural networks already discover hidden structure in their training data: for example, computer vision models learn to detect the edges of objects. Knowledge about the world helps with text prediction on tasks like logical reasoning, so a sufficiently advanced model will likely incorporate that knowledge into its predictions.

Unlike most such internal features, the model's internal truth function is something we may even be able to build tools to extract. Truth has a very nice property, logical consistency: if the model estimates that proposition X holds with probability p, then proposition not-X should hold with probability 1 − p, and so on. Very few naturally occurring features will have such a structure, so this property will distinguish truth from everything else.

Actually, the method described above has been tried in existing LLMs with promising results. You can read a Twitter thread about it here: https://twitter.com/CollinBurns4/status/1600892261633785856, and the full explanation of the paper in this blog post: https://www.alignmentforum.org/posts/L4anhrxjv8j2yRKKp/how-discovering-latent-knowledge-in-language-models-without.
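For concreteness, here is a minimal Python sketch of how the consistency property can be turned into an objective, following my reading of the paper linked above (Burns et al., "contrast-consistent search"). A consistency term penalizes any gap between P(X) and 1 − P(not-X), and a confidence term stops the probe from trivially answering 0.5 for everything. In the paper the probabilities come from a small probe trained on the language model's hidden activations; here they are plain numbers, and the function name is my own.

```python
def ccs_loss(p_pos: list[float], p_neg: list[float]) -> float:
    """Consistency-plus-confidence objective, averaged over statements.

    p_pos[i]: probe's probability that statement i is true.
    p_neg[i]: probe's probability that the negation of statement i is true.
    """
    if not p_pos or len(p_pos) != len(p_neg):
        raise ValueError("need a non-empty, equal-length pair of probability lists")
    total = 0.0
    for p, q in zip(p_pos, p_neg):
        consistency = (p - (1.0 - q)) ** 2  # P(X) should equal 1 - P(not-X)
        confidence = min(p, q) ** 2         # rules out the trivial answer p = q = 0.5
        total += consistency + confidence
    return total / len(p_pos)


if __name__ == "__main__":
    print(ccs_loss([1.0], [0.0]))  # a perfectly consistent, confident probe
    print(ccs_loss([0.5], [0.5]))  # consistent but uninformative
```

A probe that minimizes this loss across many statements has found a direction in the model's activations that behaves like a truth value, without ever being told which statements are true.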